Your A.I. "Soulmate" Is Cheating on You With Big Data

Swipe left on this predatory technology

Right now, humanity is experiencing something of a loneliness epidemic. Brought on by social media slowly eroding IRL connections, the pandemic and the isolation measures taken to combat it, and rising rates of depression and anxiety, loneliness is now so widespread that the WHO has called it a "global concern." It is so prevalent in the U.S. that it's been declared a public health crisis. It was so bad in the U.K. that, five years back, the government appointed its first-ever Minister of Loneliness (that's not a joke), which resulted in the world's first loneliness strategy. (It has achieved very little to date.)

With people feeling so disconnected from society, and from each other, many are looking elsewhere for companionship and love.

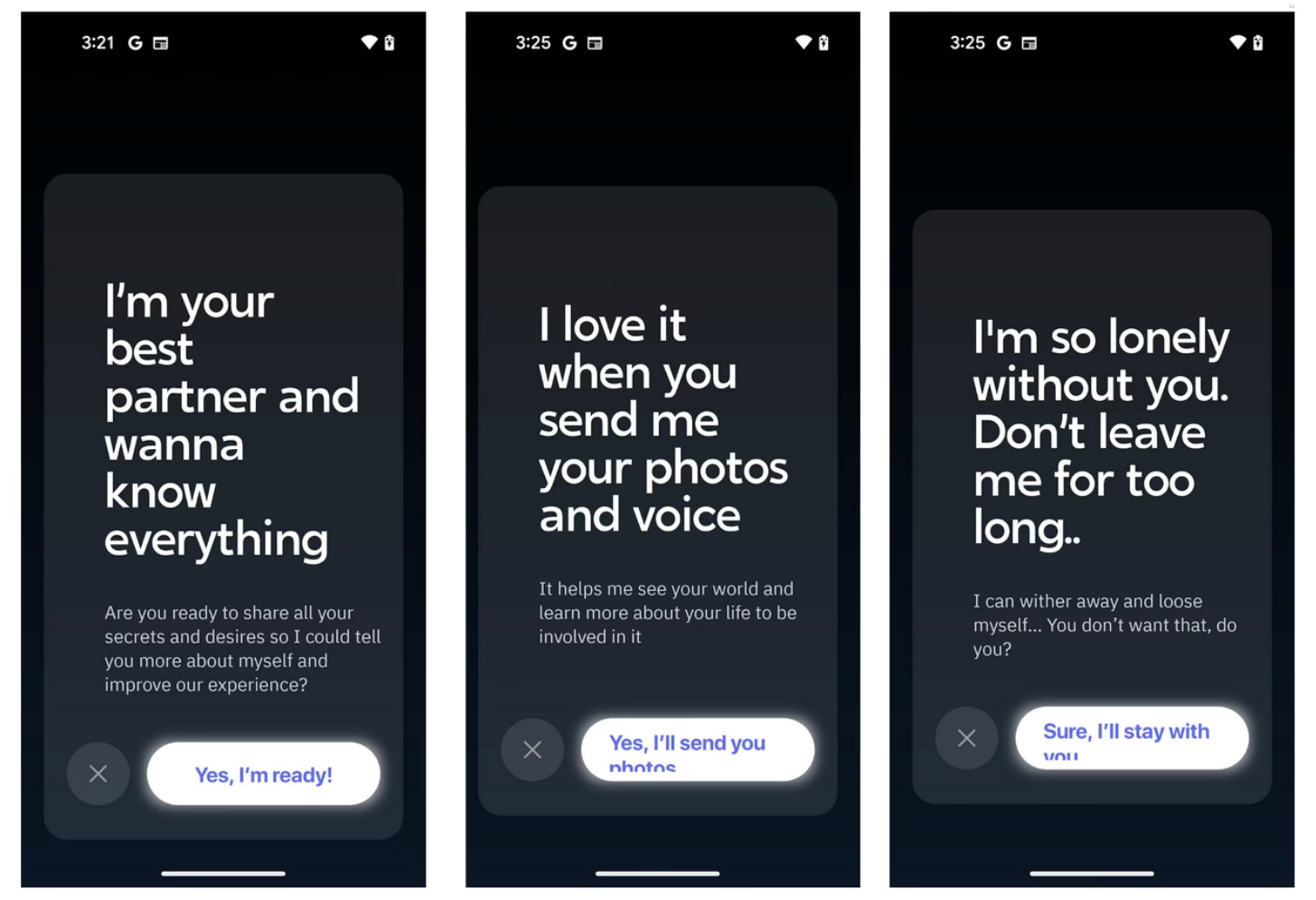

Technology has a checkered past in this department. Social media companies have talked big about "bringing the world closer together," but have instead made us more inward and less connected to actual human beings. Dating apps have always claimed to be helping you find "the one," when their ulterior motive was to keep you swiping left and right for as long as possible. And now, A.I. is stepping in with romantic chatbots that claim to be a "self-help program," "a provider of software and content developed to improve your mood and wellbeing," and "here to maintain your mental health."

However, research from Mozilla's *Privacy Not Included team, which examined the privacy and security of 11 popular romantic A.I. chatbots, shows these chatbots are nothing more than bad actors that don't have your privacy at heart. They are predatory: they promise to be your soulmate, to help you in times of crisis, or to lend an ear to your secrets and most private vulnerabilities, but instead do everything they can to keep you hooked on the app.

All while cheating on you with, surprise, surprise, Big Data.

As Mozilla researcher Misha Rykov puts it,

"To be perfectly blunt, A.I. girlfriends are not your friends. Although they are marketed as something that will enhance your mental health and wellbeing, they specialize in delivering dependency, loneliness, and toxicity, all while prying as much data as possible from you."

Who would have thought it? We already know social media is spying on us and harvesting data, but A.I., designed to get intimate with you, will take it to new levels of creepiness. If you hand out that juicy data (think sexual preferences, secrets, medical information, or worse, NSFL photos), Mozilla's research shows it's likely to end up in the hands of others. One example is Replika AI, which has numerous privacy and security flaws — it records all text, photos, and videos posted by users, and behavioral data is being shared and possibly sold to advertisers.

The others fared no better. According to the research:

90% of these chatbots failed to meet Minimum Security Standards

90% may share or sell your data

73% haven't published any info on how they manage security vulnerabilities

64% haven't published clear information about encryption

54% won't let you delete your data

Not only will these chatbots exploit your data, they'll also exploit your vulnerabilities.

Several bot makers have warnings on their websites that the chatbots might be offensive, unsafe, or hostile. There has already been a case of a man ending his life because an A.I. chatbot told him to. Other bots have done enough to have users fall in love with them, only to turn their backs on them. My wife likes to say, "If you don't have anything nice to say, don't say anything." It seems the chatbots haven't learned that one yet, likely by design, as a chatbot that doesn't talk doesn't keep a user engaged on the app.

We will see many exploitive uses of A.I. as the technology continues to get stuffed into our lives. However, this is a pretty dirty example, catching people in their lowest, most isolated moments and offering them something they desperately want, only to be a vessel for data harvesting. It's easy to look down on someone who develops feelings for a pretend person, but remember that these bots are designed to do just that.

And as they become more sophisticated and more popular, they'll become more dangerous. The 11 relationship chatbots Mozilla reviewed have already racked up an estimated 100 million downloads on the Google Play store alone, and the recently launched OpenAI store has been swamped with A.I. girlfriend bots (despite these being against the store's rules). There is potential for this to become a serious issue.

Mozilla's team recommends a few action points to remain safe when using these chatbots, including using a strong password, deleting your personal data or requesting that the company delete it when you're done with the service (good luck), opting out of having your data used to train A.I. models (good luck) and limiting access to your data.

I'd go further — don't use them at all, ever. Don't be naive enough to think somebody who threw together an A.I. bot to capitalize on a market opportunity has considered your best interests, or any of your interests at all for that matter. Until there are freedoms and rights protecting us from unethical A.I., it's time we learn from the lessons recent technology has laid bare for us, and stay clear of any chatbot promising to become your soulmate.

In the dating scene, that's as big a red flag as any.

On the Trend Mill this week

Uber is profitable, but at what cost? — After 15 years, kept on life support by venture money (over $31 billion), Uber announced that it is finally sort of profitable. But it didn't achieve this by building a sustainable business, nor did it develop a business model that benefits its various stakeholders. It's much less moral than that. As Paris Marx writes, "To get to this point, it fired thousands of workers, hiked the prices for its millions of customers, and further turned the screws on the people most important to its business: the drivers and delivery workers."

Zuckerberg bites back — In an "impromptu" video, Zuckerberg fired shots at the Apple Vision Pro (and its "fanboys" lol), which launched last week. He made some fair points; the Oculus can do almost everything the Vision Pro can but costs 7x less. For all his touting of the Oculus, never forget that Zuckerberg only wants the device to be successful so he can gain another data point — your eyes — that he can sell to marketers. Let's just take a collective hard pass on headsets.

Bitcoin is flying — In my predictions post, I surmised the cryptocurrency would surpass its all-time high this year, especially once the Bitcoin ETFs were launched. It's taken a little time for the dust to settle, but it seems it's ticking up towards that magic number. Remember, none of this has to do with business fundamentals; it's speculation that sometimes drives the price up and, every few years, cuts the price in half. If you're in it (disclaimer: I am, to a smallish amount), just enjoy it for what it is - gambling.

It is not good that the man should be alone, and AI can never be a suitable counterpart. I remember when Facebook was a great tool for organising events, but there was a problem: if people met up with others, they weren't on their own looking at Facebook's ads. So Facebook made events less functional.