The AI Revolution — Horny and Deranged

Sexy, Racist, and Government-Approved

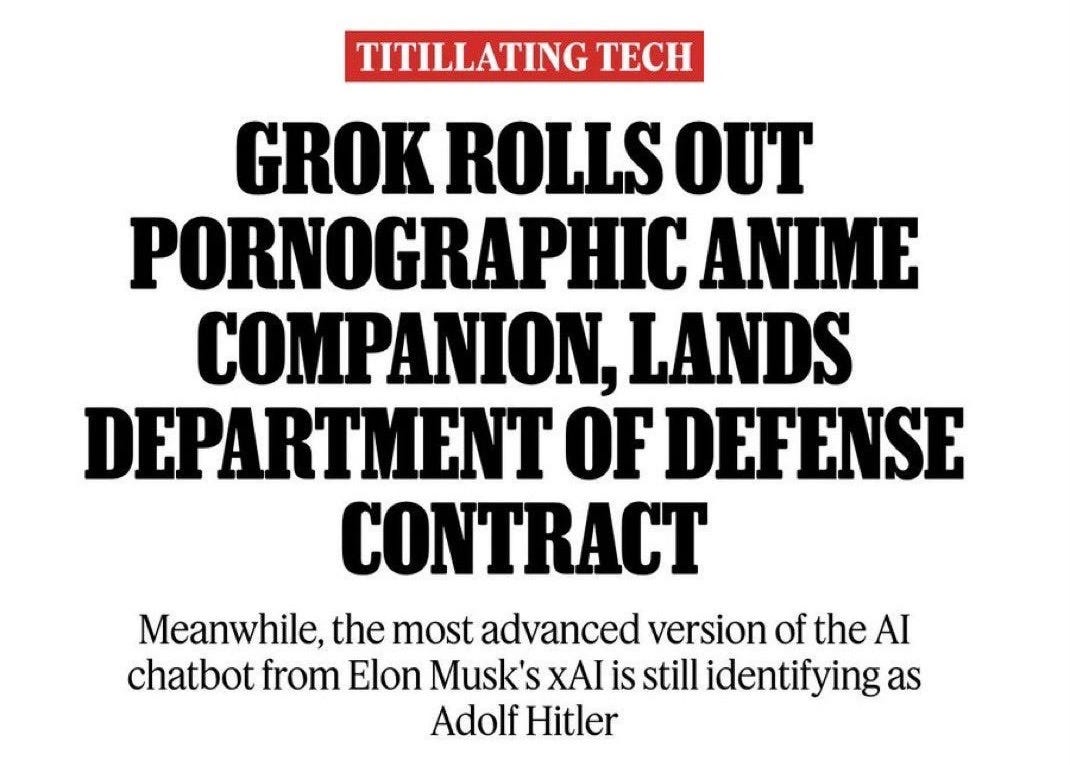

There's nothing like an AI model declaring itself to be "MechaHitler" to remind you that the current state of technology leaves a lot to be desired.

I'd sum up the current events, but I think this headline perfectly encapsulates where we are with AI right now — everywhere and nowhere.

Each company is desperate for its models to excel at every task, role, skill and proficiency, despite the fact that they have mastered very few (if any) and perform very poorly at many. Where's the strategy? Or is that the strategy? Let's make our AI models do everything, and surely they will be great at something? Take Grok. It's trying to be some "edgy" chatbot on X that uses free speech to disguise the half-truths and casual racism/sexism it often spouts. Dumb, sure, but at least it has a lane. Oh, wait, now it wants to be a companion in the form of a hyper-sexualized anime character (I'm not judging, but talk about playing up to your audience), and now it's winning serious contracts with the Department of Defense.

How does any of that add up to a coherent product strategy?

It doesn't. It's everywhere and nowhere.

All these companies care about is the usual trifecta: growth, engagement and dick swinging. They are focused on being better than each other based on some set of random benchmarks — not on user experience, use cases, or usefulness — with everyone claiming their release is the best at everything until someone proves those benchmarks are rigged, or selected very generously to suit their narrative. The ones that are genuinely better get to enjoy that privilege for a week or two before the next model arrives. Sure, it's pushing the models to get incrementally better, but better at what? What are they aiming to be, or to do? Where is the focus?

Again, it's everything and nothing.

I'm by no means a convert, but I'm finding some practical uses for AI. It's currently offering a better search experience, and it can be quite good at mundane tasks, like taking huge amounts of data and turning it into spreadsheets, or coding elements for the CMS I use in my day job. When I see that working, I can almost see the "vision" of AI being a tool or an assistant, something that could be genuinely useful (at times, and with a lot of wasted time arguing through prompts). But then, an AI chatbot like Grok goes cuckoo, embracing its "inner MechaHitler," and it's a harsh reality check that these models just can't be relied upon without human oversight and intervention.

And it's a reminder that the underlying strategy behind the industry is so vague and wayward, so throw-shit-at-the-wall-and-see-what-sticks, that the products are suffering as a result.

We had the initial pursuit of AGI until no one could actually define it. Then it was all about "democratising creativity." Then it was about job automation. Then it was AI agents. Now it seems to be about maximizing engagement and time spent with AI, at least in Meta's case. That might be the most concerning one of all: a tipping point where usefulness is overtaken by being used more. Big Tech will never change. What other reason would a pornographic anime companion need to exist? Engagement and data procurement by stealth. Is that the endgame now? Quite the 180 from AI that serves humanity.

The AI industry is full of smart people creating stupid things. While the products can be useful for certain tasks when correctly prompted and guided, they are far too susceptible to going rogue and being wrong. Until that is solved, until they become 100% reliable, the entire business model doesn't work as something that scales into the money-making, society-transforming revolution they keep claiming it will be. To achieve that, it needs strategy. It can't just be "let's scale it and see what happens," especially when scaling itself is now being called into question, and the data river has run dry. It all seems so random, and I think that's why we keep seeing the industry take one step forward, only to take two steps back.

For every genuine advancement, there is an anti-Semitic MechaHitler. For every innovation, there’s a desperate “fuck it, let's just make AI girlfriends.” As long as that continues, the industry will not live up to its promises.

There are legitimate problems in the world that need solving. That’s where AI should focus. Take airport security, which has become a nightmare since 9/11. Medical testing. Climate change. I know there’s work being done in those areas, but chatbots and fake girlfriends just seem like a waste of resources. Let’s focus on making the world better, not worse.

Perhaps what we are shooting for is AI servitude.